As the BioPharma industry moves toward more data-intensive, portfolio-wide, agile decision-making under pressure to innovate and shorten time to market, one capability stands out: connecting the questions staff ask to regulatory-compliant, verifiable answers retrieved from study data systems. Organizations that master it will move faster, decide smarter, and ultimately bring therapies to patients more efficiently, all while maintaining the regulatory rigor that patient safety demands.

The Difficulty of Reconciling Ease of Use with the Need for Rigor, Precision, & Compliance

In clinical research and development, data governance is non-negotiable. Every data point from preclinical studies, clinical trials, and biomarker assays must be meticulously managed, standardized, and secured to meet regulatory requirements and ensure scientific integrity.

This rigor is essential, yet it creates an inherent tension: these complex data systems are not easily accessed or used by everyone who has a legitimate need to know.

Traditional clinical data platforms require users to master complex query interfaces, understand intricate metadata schemas, and navigate structured search parameters. Even seasoned researchers find themselves translating their questions into machine-readable syntax, spending valuable time on mechanics rather than science. Worse, they hand the question to data assistants who return with results days or weeks later, often having misinterpreted the original intent. The knowledge exists within perfectly governed repositories, but accessing it remains frustratingly technical.

This isn’t a problem unique to any single platform; it’s an industry-wide challenge that demands architectural innovation. It can be solved, provided there is a searchable, compliant repository that covers every stage and type of study data, from post-discovery nonclinical in-vivo animal studies to human clinical trials, along with their biomarker assays.

The Architectural Opportunity: Intelligent Interfaces for Governed Data

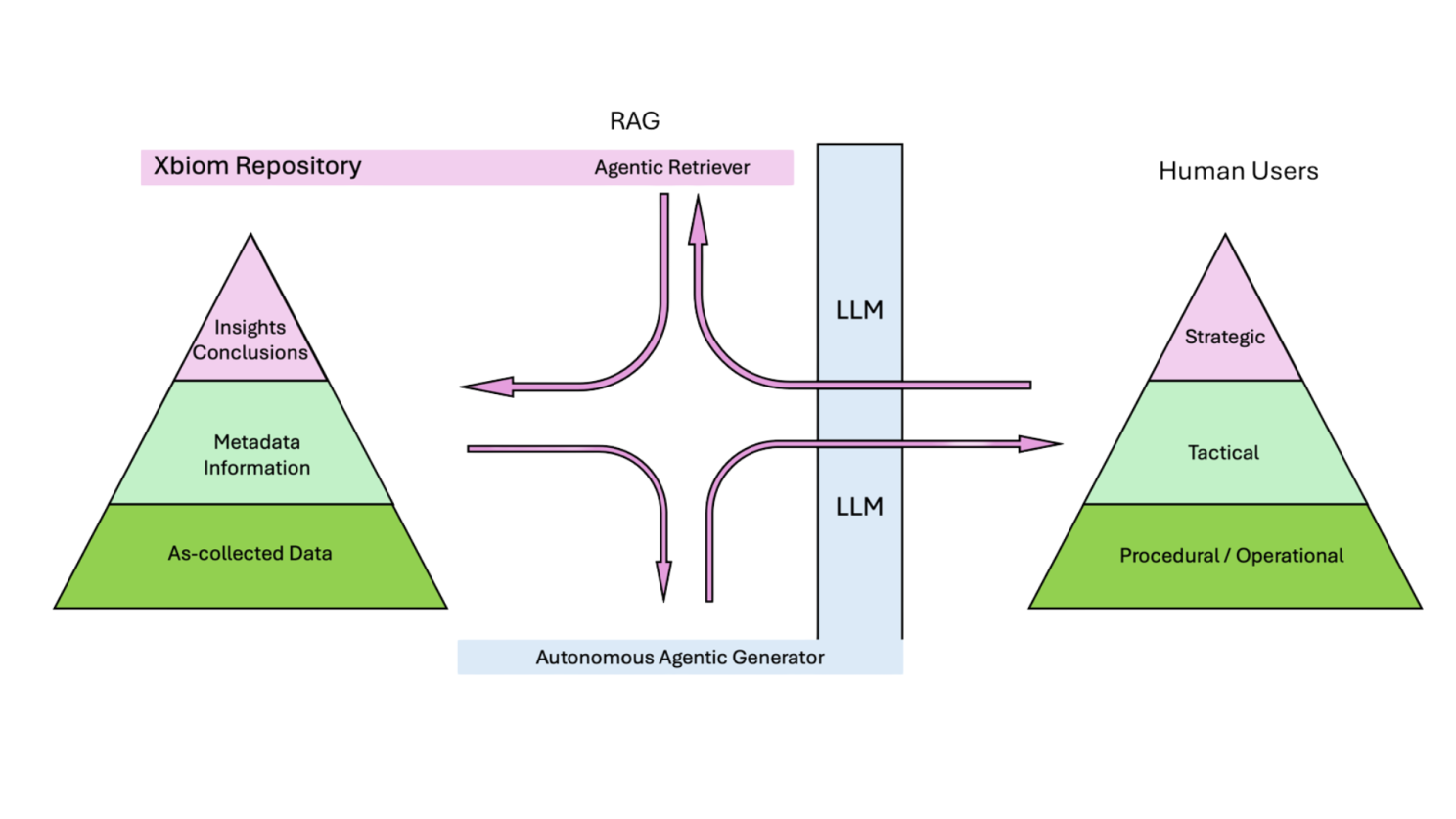

The emergence of Large Language Models (LLMs) combined with Retrieval-Augmented Generation (RAG) presents a solution that doesn’t compromise regulatory rigor but makes it accessible. This architectural pattern creates an intelligent translation layer between human inquiry and structured data systems.

The Three-Stage Translation Process

Modern implementations of this architecture operate through distinct stages:

- Intent Interpretation: When a researcher asks “Show me dose-related adverse events from our oncology trials last quarter,” an LLM interprets the scientific intent: “dose-related” implies causality analysis, “oncology trials” requires therapeutic area filtering, and temporal context guides date ranges. This natural language understanding captures nuance that rigid query masks cannot.

- Structured Retrieval: The RAG framework translates human intent into precise technical queries against the underlying data repository. This can involve several operations: restricting API access to the capabilities the data systems support, accessing study metadata, retrieving as-collected or standardized datasets, pulling relevant analytical outputs, or navigating to specific visualization tools. The key is maintaining the integrity and traceability of each data access.

- Contextualized Response: Rather than returning raw query results, the system synthesizes responses in human-readable formats while preserving links to source data, compliance metadata, and supporting evidence, all of which a regulatory environment requires. Critically, the conversation maintains context, allowing iterative refinement without losing the thread of inquiry.
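The three stages above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Whisperer or any specific platform is implemented: keyword rules stand in for the LLM, an in-memory list stands in for the governed repository, and every name (`interpret_intent`, `retrieve`, `respond`, `SUPPORTED_OPERATIONS`, the record fields) is hypothetical. Temporal filtering ("last quarter") is left out for brevity.

```python
from dataclasses import dataclass, field

# Hypothetical allowlist: the only operations the data system exposes.
SUPPORTED_OPERATIONS = {"list_adverse_events", "get_study_metadata"}

# Hypothetical in-memory stand-in for the governed repository.
REPOSITORY = [
    {"record_id": "AE-001", "therapeutic_area": "oncology", "dose_related": True},
    {"record_id": "AE-002", "therapeutic_area": "oncology", "dose_related": False},
    {"record_id": "AE-003", "therapeutic_area": "cardiology", "dose_related": True},
]


@dataclass
class Intent:
    operation: str
    filters: dict


@dataclass
class Answer:
    summary: str
    sources: list = field(default_factory=list)


def interpret_intent(question: str) -> Intent:
    """Stage 1: keyword rules stand in for the LLM (assumption)."""
    text = question.lower()
    filters = {}
    if "oncology" in text:
        filters["therapeutic_area"] = "oncology"
    if "dose-related" in text:
        filters["dose_related"] = True
    operation = "list_adverse_events" if "adverse event" in text else "get_study_metadata"
    return Intent(operation, filters)


def retrieve(intent: Intent, repository: list) -> list:
    """Stage 2: run only allowlisted operations against the repository."""
    if intent.operation not in SUPPORTED_OPERATIONS:
        raise PermissionError(f"unsupported operation: {intent.operation}")
    return [
        row for row in repository
        if all(row.get(key) == value for key, value in intent.filters.items())
    ]


def respond(intent: Intent, rows: list) -> Answer:
    """Stage 3: summarize while keeping links back to the source records."""
    summary = f"{len(rows)} record(s) matched {intent.filters}"
    return Answer(summary, sources=[row["record_id"] for row in rows])


question = "Show me dose-related adverse events from our oncology trials last quarter"
intent = interpret_intent(question)
answer = respond(intent, retrieve(intent, REPOSITORY))
print(answer.summary, answer.sources)
```

The point of the sketch is the separation of concerns: the interpretation step never touches data, the retrieval step refuses anything outside the allowlist, and the response always carries the identifiers of the records it was built from.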

A Working Example: The Whisperer Approach

The Xbiom platform’s “Whisperer” chat-box exemplifies this architecture in practice. It demonstrates how natural language interfaces can work with enterprise-grade clinical data systems while maintaining security and compliance.

Operational Layer- Data managers can query study status, validate data completeness, or retrieve specific Case Report Forms through conversational commands. Behind the scenes, the system accesses several applications and the status of the study data within them: the Unified Data Model (UDM) repository for data and metadata, including unstructured documents and other files; the Smart Transformer; the Metadata Repository; and the study data Visualization. Through these it can reach raw study data, analyzed metadata, intermediate endpoints, and final TFLs. Study metadata, trial designs, and visits are all available to these queries.

Tactical Layer- Clinical operations teams access SDTM-standardized outputs, generate comparative visualizations across trial arms, or extract biomarker data, without memorizing API syntax. The Interactive Graphics Object (IGO) engine presents data in context, whether for internal reviews or regulatory preparation.

Strategic Layer- Decision-makers explore peer insights, identify trends, analyze treatment-emergent patterns, or review annotated Tables, Figures, and Listings (TFLs) through progressive dialogue that refines from broad inquiry to precise answer. The conversation itself becomes a discovery tool.

Together, these layers form Xbiom’s three-layer architecture, which organizes content across three maturity levels that align with different types of user inquiry.

What makes this implementation noteworthy isn’t the technology alone; it’s the preservation of governance throughout the interaction.

Intelligent Governance

The industry has a valid concern about AI interfaces: how is compliance maintained when users interact freely in plain language rather than through structured inputs? The answer lies in architectural design that enforces governance at every step:

Authentication and Authorization- All security checks are completed before the natural language layer engages, so it only ever serves an authenticated, authorized user. The LLM interface is a post-authorization tool, never a bypass mechanism.
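A minimal sketch of this ordering, assuming a hypothetical `User` record and a placeholder `interpret` function in place of a real LLM call: the checks run first, and the language layer is simply never reached when they fail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    # Hypothetical user record; a real system would get this from its identity provider.
    user_id: str
    authenticated: bool
    permitted_studies: frozenset


def interpret(question: str) -> str:
    """Placeholder for the natural language layer (no real LLM call)."""
    return f"interpreted: {question}"


def handle_question(user: User, study_id: str, question: str) -> str:
    # Security checks run first; on failure the language layer is never called.
    if not user.authenticated:
        raise PermissionError("user is not authenticated")
    if study_id not in user.permitted_studies:
        raise PermissionError(f"user may not access study {study_id}")
    return interpret(question)


data_manager = User("dm-01", True, frozenset({"STUDY-001"}))
print(handle_question(data_manager, "STUDY-001", "What is the enrollment status?"))
```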

Transparent Auditing- Every conversational query maps explicitly to API calls against the data repository. Audit trails capture not just what data was accessed, but the reasoning path that led there, a critical step for regulatory inspection.
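One way to picture such an audit entry is shown below. It is a sketch only: the field names, the endpoint path, and the `record_query` helper are hypothetical and not taken from any real platform. Each entry ties the user's question to the interpretation steps and to the API calls that were actually issued.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditEntry:
    user_id: str
    question: str    # what the user asked, verbatim
    reasoning: list  # interpretation steps that led to the calls
    api_calls: list  # the concrete repository calls that were made
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def record_query(log: list, user_id: str, question: str,
                 reasoning: list, api_calls: list) -> AuditEntry:
    """Append one serialized entry per conversational query to the log."""
    entry = AuditEntry(user_id, question, list(reasoning), list(api_calls))
    log.append(json.dumps(asdict(entry), sort_keys=True))
    return entry


audit_log = []
record_query(
    audit_log,
    user_id="dm-01",
    question="Show me dose-related adverse events from our oncology trials",
    reasoning=["'oncology trials' -> therapeutic_area=oncology",
               "'dose-related' -> dose_related=true"],
    # Hypothetical endpoint, for illustration only.
    api_calls=[{"endpoint": "/adverse-events",
                "params": {"therapeutic_area": "oncology", "dose_related": True}}],
)
print(audit_log[0])
```

Serializing each entry as a self-contained JSON line keeps the question, the reasoning path, and the resulting calls together, which is what an inspector would need to reconstruct an interaction.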

Data Lineage and Provenance- Retrieved data maintains complete traceability to source. Whether accessing raw study data, transformed datasets, or derived insights, the chain of custody remains intact, verifiable and documented.
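As an illustration of what traceability to source can mean in practice, the sketch below records a parent link and a content checksum on every dataset version, then walks the links back to the raw data. The `DatasetVersion` structure, the catalog, and the dataset names are assumptions made for this example.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DatasetVersion:
    dataset_id: str
    content: bytes
    derived_from: Optional[str] = None    # parent dataset_id; None for raw data
    transformation: Optional[str] = None  # name of the validated process used

    @property
    def checksum(self) -> str:
        """SHA-256 of the content, so any later change is detectable."""
        return hashlib.sha256(self.content).hexdigest()


def trace_lineage(dataset_id: str, catalog: dict) -> list:
    """Walk parent links from a dataset back to its raw source."""
    chain = []
    current = catalog.get(dataset_id)
    while current is not None:
        chain.append(current.dataset_id)
        current = catalog.get(current.derived_from) if current.derived_from else None
    return chain


# Hypothetical three-step chain: raw collection -> standardized -> summary table.
versions = [
    DatasetVersion("raw-ae", b"as-collected adverse events"),
    DatasetVersion("sdtm-ae", b"standardized adverse events", "raw-ae", "standardize"),
    DatasetVersion("tfl-ae", b"adverse event summary table", "sdtm-ae", "summarize"),
]
catalog = {version.dataset_id: version for version in versions}
print(trace_lineage("tfl-ae", catalog))
```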

Validated Transformation- Data standardization and transformation occur through controlled, validated processes. The conversational interface doesn’t create new data; it retrieves governed data through established pathways.

Controlled External Exchange- For multi-organization studies involving CROs or partners, Virtual Data Rooms (VDRs) and similar secure exchange mechanisms ensure that natural language access doesn’t compromise controlled distribution.

The goal is to accelerate adoption by inviting everyone in, from strategic researchers and clinical operations teams to data managers and data scientists.

Why This Architecture Matters Now

Several industry trends make this architectural pattern urgent:

Data Volume and Complexity- Modern drug development generates large volumes of heterogeneous data: multi-omic data; immuno-markers such as cell phenotyping, cytokines, ADA, and others; clinical data; nonclinical or pre-IND data; and real-world evidence. Traditional query interfaces struggle to span this diversity efficiently.

Portfolio-Wide Analysis- Strategic decisions require cross-study, cross-indication insights. Asking “How do our Phase 2 safety profiles compare across our oncology portfolio?” should be straightforward, not a week-long data engineering or programming project.

Regulatory Evolution- As agencies embrace advanced analytics and real-world evidence, the ability to rapidly access, contextualize, and present data becomes a competitive advantage. Speed cannot come at the cost of rigor; it must enhance it.

Knowledge Preservation- Institutional knowledge lives in annotations, peer reviews, and analytical narratives, often unstructured. Natural language interfaces can make this knowledge searchable without forcing it into rigid schemas that lose nuance.

Implementation Principles: What Works

Based on implementations like Whisperer and emerging industry patterns, several principles appear critical:

- Repository-First Design: The governed data repository remains the source of truth. The LLM interface is a client, not a replacement for proper data architecture.

- Metadata Richness: Effective natural language querying requires comprehensive metadata repositories, standards, terminologies, mappings, and relationships that help the system understand scientific context.

- Multi-Modal Response: Users need different formats: downloadable datasets (CSV, XPT, JSON), interactive visualizations, summarized insights, or direct links to analytical dashboards. The architecture must support flexible packaging.

- Progressive Disclosure: Initial responses should provide an overview with pathways to detail. A conversation about adverse events might start with summaries but allow drilling into specific patient listings or statistical analyses.
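The last two principles lend themselves to a small sketch. The example below assumes a hypothetical adverse event listing and uses only the Python standard library: `overview` gives the summary a conversation might start with, `drill_down` returns the listing behind one summary number, and `package` serializes the same rows as JSON or CSV. XPT export is omitted because it needs a third-party library.

```python
import csv
import io
import json
from collections import Counter

# Hypothetical adverse event listing; field names are illustrative only.
EVENTS = [
    {"subject_id": "S-01", "event": "nausea", "severity": "mild"},
    {"subject_id": "S-02", "event": "neutropenia", "severity": "severe"},
    {"subject_id": "S-03", "event": "nausea", "severity": "mild"},
]


def overview(rows: list) -> dict:
    """First response: counts per severity, no subject-level detail."""
    return dict(Counter(row["severity"] for row in rows))


def drill_down(rows: list, severity: str) -> list:
    """Follow-up response: the listing behind one summary number."""
    return [row for row in rows if row["severity"] == severity]


def package(rows: list, fmt: str) -> str:
    """Serialize the same rows as JSON or CSV, depending on the request."""
    if fmt == "json":
        return json.dumps(rows, indent=2)
    if fmt == "csv":
        if not rows:
            return ""
        buffer = io.StringIO()
        writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)
        return buffer.getvalue()
    raise ValueError(f"unsupported format: {fmt}")


print(overview(EVENTS))
print(package(drill_down(EVENTS, "severe"), "csv"))
```

Keeping the summary and the listing as two views over the same governed rows means the detail a user drills into is always the data the summary was computed from.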

Beyond Convenience: Strategic Implications

This architectural shift represents more than an improvement in user experience. It increases adoption and fundamentally changes how organizations leverage their data assets:

Democratized Access- Subject matter experts access data through their domain language, not database syntax. This doesn’t eliminate data specialists; it frees them to focus on complex analytical challenges rather than routine retrieval and data wrangling.

Accelerated Insights- When strategic questions can be explored conversationally in minutes rather than through multi-day data requests, the pace of decision-making transforms. Clinical development cycles compress.

Reduced Friction- Lower barriers to data access mean questions get asked and answered. Hypotheses get tested. Patterns emerge. The organization becomes more analytically agile without sacrificing control.

Enhanced Collaboration- Cross-functional teams can explore data together, in real time, using natural dialogue. They can traverse studies within their therapeutic areas or let their research trace new paths across clinical and nonclinical studies for predictive safety. Traditional handoffs, in which clinical asks IT and IT asks data science, collapse into direct exploration.

The Path Forward: Principles Over Products

The clinical research industry stands at an inflection point. The question isn’t whether natural language interfaces will become standard; it’s how quickly organizations adopt architectures that make them safe, compliant, and effective.

Success requires:

- Investment in foundational data governance – Unified data models, comprehensive metadata, validated transformations

- Security-first AI implementation – Authentication before interpretation, transparent audit trails, controlled access

- Hybrid expertise – Teams that understand both clinical science and AI architecture

- Iterative deployment – Starting with defined use cases, learning, expanding

Platforms like Xbiom’s Whisperer demonstrate that this vision is achievable today, not aspirational. The architecture works. The compliance holds. The value is real.

The real question for research organizations: Can you afford to leave institutional knowledge locked behind interfaces that require technical translation? Or is it time to let science speak in its own language, and let the systems keep up?